hoi.core.get_entropy

- hoi.core.get_entropy(method='gc', **kwargs)

Get the function that computes entropy with the selected method.

- Parameters:

- method : {‘gc’, ‘gauss’, ‘binning’, ‘histogram’, ‘knn’, ‘kernel’}

Name of the method used to compute entropy. Use one of:

- ‘gc’: Gaussian copula entropy [default].
- ‘gauss’: Gaussian entropy. See hoi.core.entropy_gauss()
- ‘binning’: estimator to use for discrete variables.
- ‘histogram’: estimator based on binning the data to estimate the probability distribution of the variables, then computing the differential entropy. For more details see hoi.core.entropy_hist()
- ‘knn’: k-nearest neighbor estimator.
- ‘kernel’: kernel-based estimator of entropy.
- A custom entropy estimator can also be provided. It should be a callable function written with JAX, taking a single 2D input of shape (n_features, n_samples) and returning a float.

- kwargs : dict | {}

Additional arguments sent to the entropy function.

- Returns:

- fcn : callable

Function to compute entropy on a variable of shape (n_features, n_samples)
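The custom-estimator option described under `method` can be illustrated with a minimal sketch. The function below, `gaussian_entropy`, is a hypothetical name introduced here for illustration and is not part of hoi. It assumes the input layout stated above, (n_features, n_samples), fits a Gaussian to the data, and returns the differential entropy in nats, a unit chosen for this sketch that may differ from the one hoi's built-in estimators use.

```python
import jax
import jax.numpy as jnp


def gaussian_entropy(x):
    """Differential entropy (in nats) of a Gaussian fitted to x.

    Hypothetical example estimator, not part of hoi.
    x has shape (n_features, n_samples).
    """
    n_features = x.shape[0]
    # Sample covariance across the sample axis (rows are variables).
    # atleast_2d covers the single-feature case, where jnp.cov returns a scalar.
    cov = jnp.atleast_2d(jnp.cov(x))
    # slogdet is numerically safer than taking log(det(cov)) directly.
    _, logdet = jnp.linalg.slogdet(cov)
    # H = 0.5 * (d * (log(2*pi) + 1) + log|cov|)
    return 0.5 * (n_features * (jnp.log(2.0 * jnp.pi) + 1.0) + logdet)


# Synthetic data: 3 independent standard normal features, 5000 samples.
key = jax.random.PRNGKey(0)
x = jax.random.normal(key, (3, 5000))

h = gaussian_entropy(x)
# Closed-form entropy of a 3D standard normal, for comparison.
h_theory = 0.5 * 3 * (jnp.log(2.0 * jnp.pi) + 1.0)
print(float(h), float(h_theory))
```

Based on the description above, a callable of this shape would presumably be passed as the `method` argument, for example `get_entropy(method=gaussian_entropy)`. That call is not exercised in this sketch and should be checked against the installed version of hoi.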

Examples using hoi.core.get_entropy

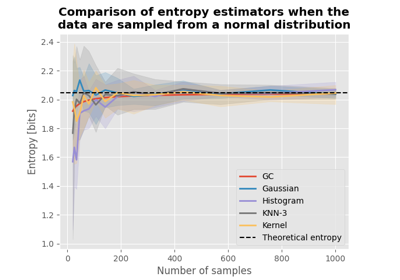

Comparison of entropy estimators for various distributions

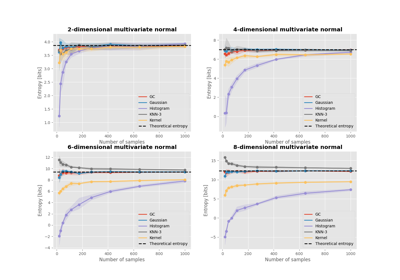

Comparison of entropy estimators for a multivariate normal

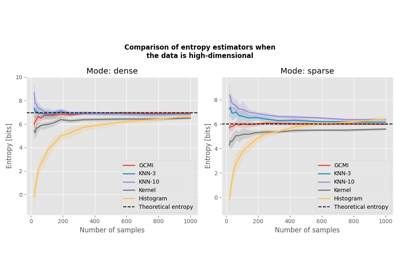

Comparison of entropy estimators with high-dimensional data

Introduction to core information theoretical metrics
