Examples

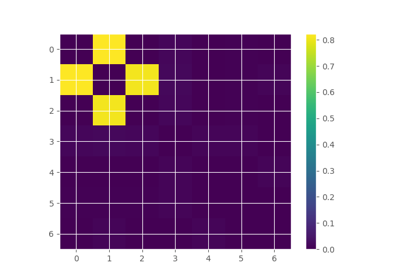

Illustration of the main functions.

Tutorials

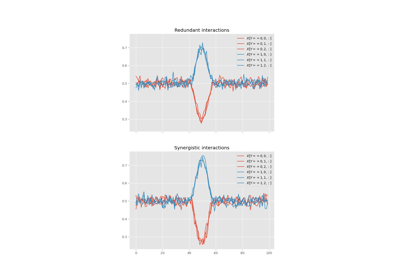

Machine-learning vs. Information theoretic approaches for HOI

Entropy and mutual information

Introduction to core information theoretical metrics
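For multivariate Gaussian variables, entropy and mutual information have closed forms: H(X) = ½ log((2πe)^d det Σ) and I(X;Y) = H(X) + H(Y) − H(X,Y). The sketch below uses only the Python standard library and assumes the covariance matrix is known and symmetric positive definite. The helper names (`logdet_spd`, `gaussian_entropy`, `gaussian_mi`) are hypothetical and are not part of the HOI toolbox API. Results are in nats.

```python
import math


def logdet_spd(m):
    """Log-determinant of a symmetric positive-definite matrix via Cholesky."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                val = m[i][i] - s
                if val <= 0:
                    raise ValueError("matrix is not positive definite")
                L[i][j] = math.sqrt(val)
            else:
                L[i][j] = (m[i][j] - s) / L[j][j]
    # det(M) = prod(L_ii)^2, so log det(M) = 2 * sum(log L_ii)
    return 2.0 * sum(math.log(L[i][i]) for i in range(n))


def submatrix(m, idx):
    """Rows and columns of m restricted to the indices in idx."""
    return [[m[i][j] for j in idx] for i in idx]


def gaussian_entropy(cov):
    """Differential entropy (nats) of a Gaussian with covariance cov."""
    d = len(cov)
    return 0.5 * (d * math.log(2 * math.pi * math.e) + logdet_spd(cov))


def gaussian_mi(cov, x_idx, y_idx):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for jointly Gaussian blocks of cov."""
    h_x = gaussian_entropy(submatrix(cov, x_idx))
    h_y = gaussian_entropy(submatrix(cov, y_idx))
    h_xy = gaussian_entropy(submatrix(cov, list(x_idx) + list(y_idx)))
    return h_x + h_y - h_xy


rho = 0.5
cov = [[1.0, rho], [rho, 1.0]]
print(gaussian_entropy([[1.0]]))   # ≈ 1.4189, i.e. 0.5 * log(2*pi*e)
print(gaussian_mi(cov, [0], [1]))  # ≈ 0.1438, i.e. -0.5 * log(1 - rho**2)
```

For a bivariate Gaussian the result reduces to −½ log(1 − ρ²), which is a convenient ground truth when checking sample-based estimators.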

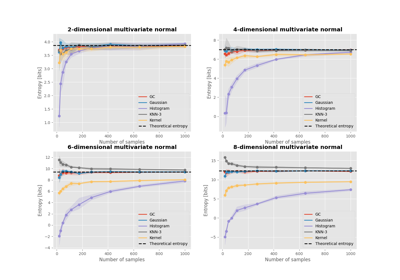

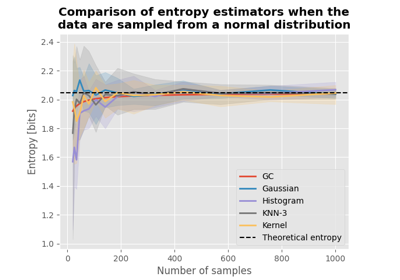

Comparison of entropy estimators for a multivariate normal
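A simple way to compare an estimator against the analytic value is to draw samples from a normal distribution and estimate its entropy. The sketch below uses a plain histogram (binning) estimator, H ≈ −Σ pᵢ log pᵢ + log Δ with bin width Δ, on one-dimensional standard normal samples. The function name `binned_entropy`, the sample size, and the number of bins are illustrative assumptions; the linked example may compare different estimators and settings.

```python
import math
import random


def binned_entropy(samples, n_bins):
    """Histogram estimate of differential entropy (nats) for 1D samples."""
    lo, hi = min(samples), max(samples)
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for x in samples:
        # Clamp the maximum value into the last bin.
        idx = min(int((x - lo) / width), n_bins - 1)
        counts[idx] += 1
    n = len(samples)
    h = 0.0
    for c in counts:
        if c:
            p = c / n
            h -= p * math.log(p)
    # Adding log(width) converts the discrete entropy into a density estimate.
    return h + math.log(width)


rng = random.Random(0)
samples = [rng.gauss(0.0, 1.0) for _ in range(20000)]
analytic = 0.5 * math.log(2 * math.pi * math.e)  # entropy of N(0, 1)
estimate = binned_entropy(samples, n_bins=50)
print(f"analytic={analytic:.4f}  binned={estimate:.4f}")
```

Binning is easy to implement but scales poorly with dimension, since the number of bins grows exponentially; that limitation is one reason to compare it with other estimators.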

Comparison of entropy estimators for various distributions

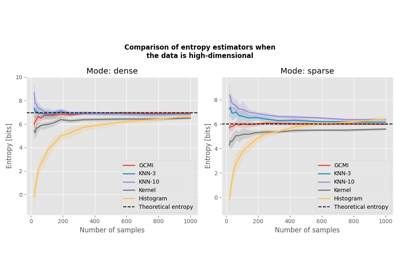

Comparison of entropy estimators with high-dimensional data

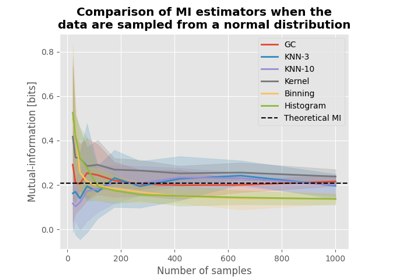

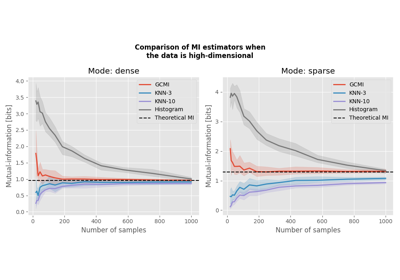

Comparison of MI estimators with high-dimensional data

Metrics of HOI

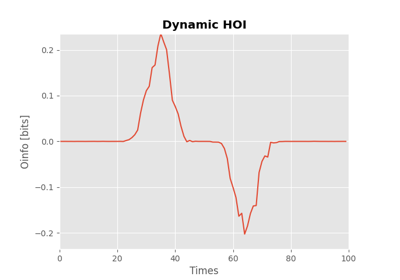

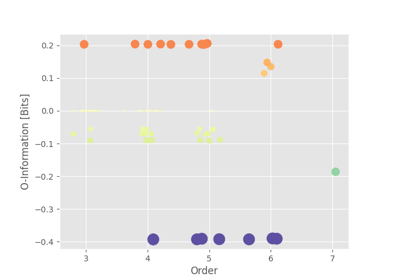

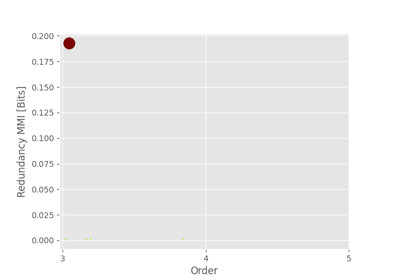

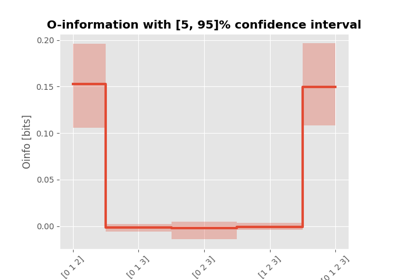

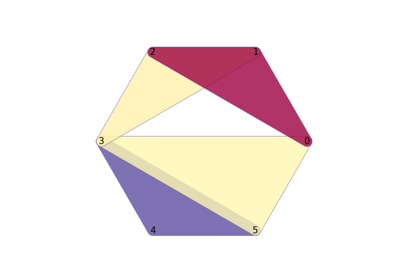

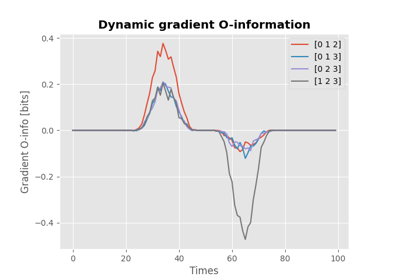

O-information and its derivatives for network behavior and encoding
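The O-information of n ≥ 3 variables can be written as Ω(X) = (n − 2) H(X) + Σⱼ [H(Xⱼ) − H(X₋ⱼ)], where X₋ⱼ denotes all variables except Xⱼ. Positive values indicate redundancy-dominated interactions and negative values synergy-dominated ones. The sketch below assumes jointly Gaussian variables with a known covariance, in which case the (2πe) constants cancel and only log-determinants remain. The function names are hypothetical and this is not the HOI toolbox implementation.

```python
import math


def logdet_spd(m):
    """Log-determinant of a symmetric positive-definite matrix via Cholesky."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(m[i][i] - s)
            else:
                L[i][j] = (m[i][j] - s) / L[j][j]
    return 2.0 * sum(math.log(L[i][i]) for i in range(n))


def submatrix(m, idx):
    return [[m[i][j] for j in idx] for i in idx]


def gaussian_o_information(cov):
    """O-information (nats) of jointly Gaussian variables with covariance cov."""
    n = len(cov)
    if n < 3:
        raise ValueError("O-information needs at least 3 variables")
    omega = (n - 2) * logdet_spd(cov)
    for j in range(n):
        rest = [i for i in range(n) if i != j]
        omega += logdet_spd(submatrix(cov, [j])) - logdet_spd(submatrix(cov, rest))
    # Every entropy carries 0.5 * (log det + d * log(2*pi*e)); the constants cancel.
    return 0.5 * omega


# Redundancy: three noisy copies of a shared source S (X_i = S + noise_i).
redundant = [[2.0, 1.0, 1.0], [1.0, 2.0, 1.0], [1.0, 1.0, 2.0]]
# Synergy: X1, X2 independent and X3 = X1 + X2 + noise.
synergistic = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0], [1.0, 1.0, 3.0]]

print(gaussian_o_information(redundant))    # positive, ≈ 0.0850
print(gaussian_o_information(synergistic))  # negative, ≈ -0.1438
```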
